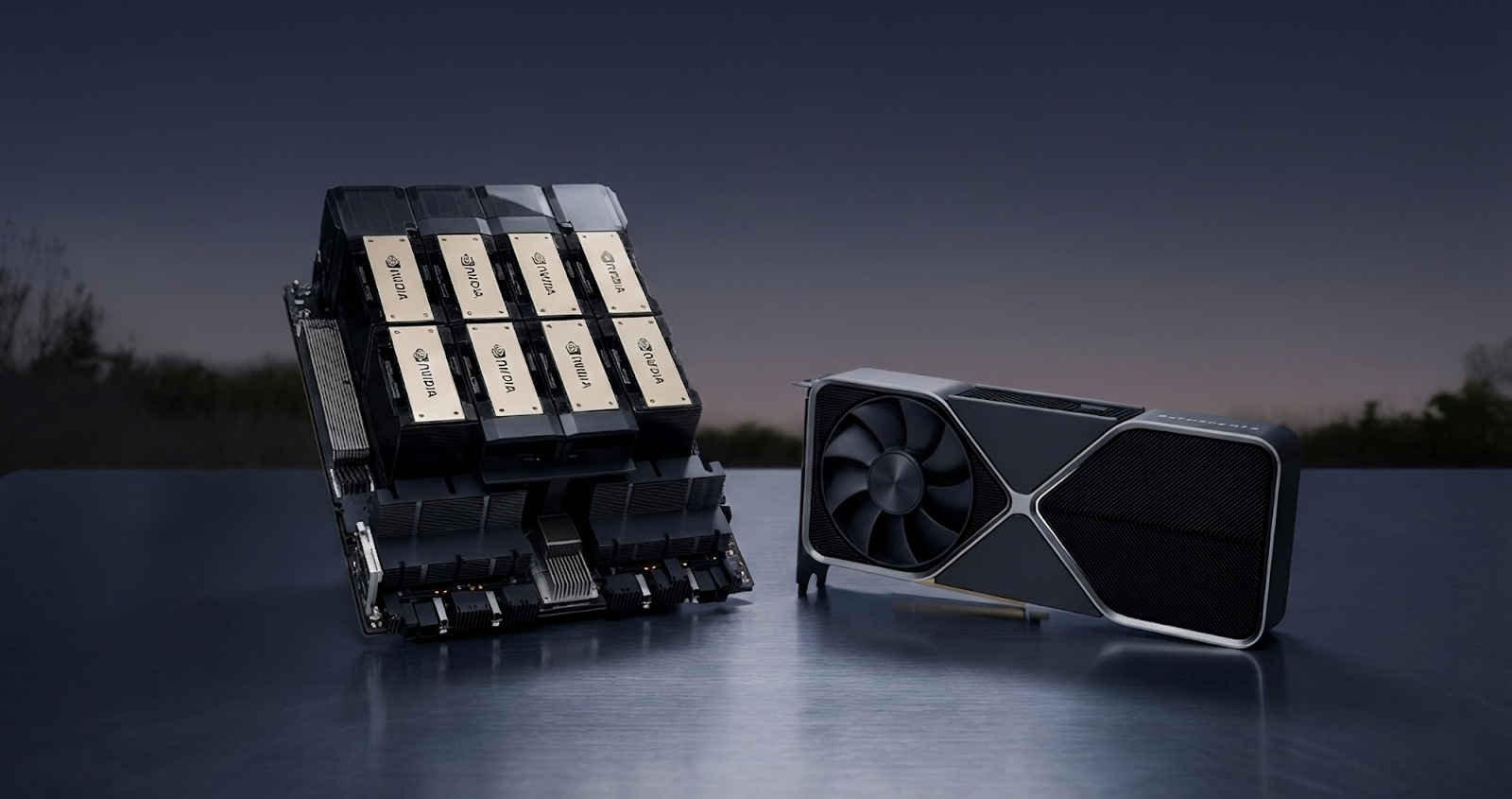

You see a shiny new gaming graphics card like the NVIDIA RTX 5090 and think:

“This is super powerful — perfect for running AI stuff!”

And you’re not wrong… but also not completely right.

Gaming GPUs and AI GPUs (also called datacenter GPUs) may look similar, but they’re built for very different jobs.

Think of it like cars:

- Gaming GPU → A fast sports car
  - Amazing speed
  - Looks great
  - Perfect for games and short bursts of power

- AI GPU → A heavy-duty truck
  - Built to carry massive loads
  - Runs nonstop
  - Perfect for training huge AI models

Let’s break it down the easy way.

What They’re Made For

Gaming GPUs

(RTX 5090, RTX 4090, AMD Radeon RX series)

Designed to make games look incredible by:

- Drawing millions of pixels instantly

- Creating realistic lighting and shadows (ray tracing)

- Delivering smooth, high-FPS gameplay

They’re optimized for visual performance and responsiveness.

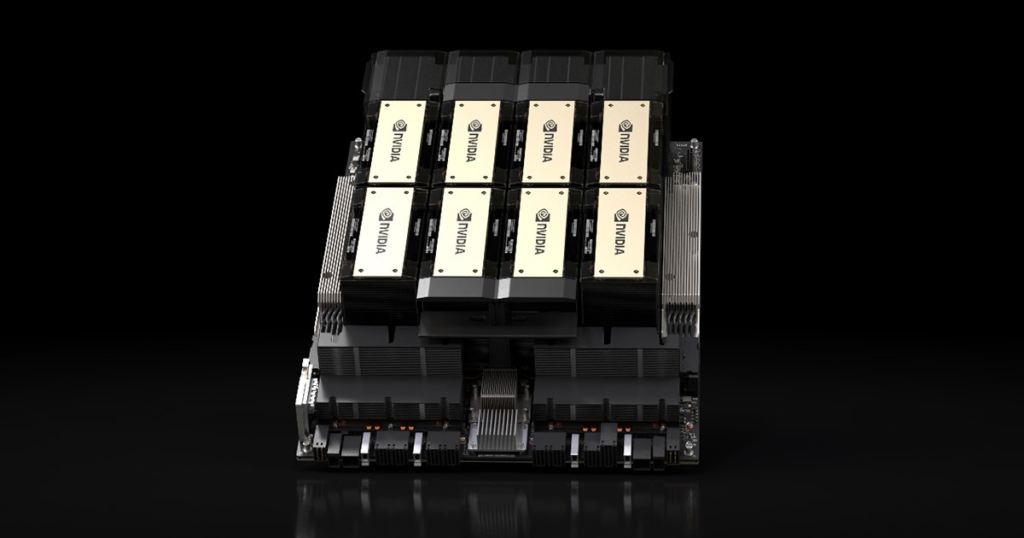

AI / Datacenter GPUs

(NVIDIA H200, A100, AMD MI300X)

Designed to:

- Perform trillions of math operations every second, mostly the matrix multiplications that neural networks are built on

- Train and run massive AI models

- Handle huge datasets efficiently

These GPUs focus on raw compute power, memory, and efficiency.
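Matrix multiplication is where most of that math goes. Here is a back-of-the-envelope count in Python. It uses the standard approximation that multiplying an m×k matrix by a k×n matrix costs about 2·m·k·n floating-point operations (FLOPs). The 4096 size is an arbitrary illustrative choice, not a figure from any particular model.

```python
# Rough FLOP count for one matrix multiplication, the core operation in
# neural networks: each of the m*n outputs sums k products, which is about
# 2*k operations once you count both the multiplies and the adds.
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate floating-point operations for (m x k) @ (k x n)."""
    return 2 * m * k * n


if __name__ == "__main__":
    # One 4096 x 4096 by 4096 x 4096 multiply (size chosen only for illustration)
    flops = matmul_flops(4096, 4096, 4096)
    print(f"{flops:,} FLOPs (~{flops / 1e9:.0f} billion)")
```

That is roughly 137 billion operations for a single multiply. A large model performs a huge number of these for every step, which is why raw compute matters so much.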

The Big Differences (Simple Table)

| Feature | Gaming GPU (RTX 4090 / RTX 5090) | AI GPU (H200 / MI300X) | Why It Matters |
|---|---|---|---|
| Memory (VRAM) | 24–32 GB | 141–192 GB | AI models are huge and need lots of space |
| Memory Bandwidth | ~1–1.8 TB/s | ~4.8–5.3 TB/s | Faster data movement = faster training and inference |
| AI-Specific Hardware | Helpful, but limited | Extremely powerful AI cores | Massive boost for AI math |
| Multi-GPU Scaling | 2–4 GPUs over PCIe (no NVLink) | 8+ GPUs with ultra-fast links (NVLink, Infinity Fabric) | Big AI jobs run on many GPUs together |
| Power & Cooling | ~450–575 W, fits in a home PC | 700 W+, datacenter cooling | AI runs nonstop for days or weeks |
| Price | $1,500–$3,000 | $15,000–$40,000 per card | Datacenter power comes at a cost |
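To see why the memory rows matter, here is a minimal Python sketch of a common rule of thumb: a model's weights take roughly (number of parameters) × (bytes per parameter). The card figures (32 GB and ~1.79 TB/s for the RTX 5090, 141 GB and ~4.8 TB/s for the H200) are rounded published specs used as assumptions. The tokens-per-second figure is only a rough upper bound for generating text one token at a time, assuming every weight is read from memory once per token. Real usage needs extra room for the KV cache, activations, and framework overhead.

```python
# Back-of-the-envelope VRAM and bandwidth estimates for RUNNING (not training)
# a model. Counts weights only; KV cache, activations, and framework overhead
# need extra room on top of this.

GPUS = {
    # name: (VRAM in GB, memory bandwidth in TB/s); rounded spec figures, assumed
    "RTX 5090": (32, 1.79),
    "H200": (141, 4.8),
}

# Bytes per parameter at common precisions (4-bit = half a byte)
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billion: float, precision: str) -> float:
    """GB needed just to hold the model weights (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given VRAM."""
    return weights_gb(params_billion, precision) <= vram_gb


def max_tokens_per_sec(params_billion: float, precision: str, bandwidth_tbs: float) -> float:
    """Rough upper bound for one-at-a-time generation: each token reads every weight once."""
    return bandwidth_tbs * 1e12 / (weights_gb(params_billion, precision) * 1e9)


if __name__ == "__main__":
    for params, precision in [(8, "fp16"), (70, "fp16"), (70, "int4")]:
        for name, (vram, bw) in GPUS.items():
            ok = fits(params, precision, vram)
            line = (f"{params}B {precision} on {name}: "
                    f"{weights_gb(params, precision):.0f} GB of weights, fits={ok}")
            if ok:
                line += f", <= {max_tokens_per_sec(params, precision, bw):.0f} tokens/s"
            print(line)
```

Under these assumptions, an 8B model in fp16 needs about 16 GB and fits on either card. A 70B model needs about 140 GB in fp16, so only the H200 holds it, and even at 4-bit it needs about 35 GB, just over a 32 GB gaming card.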

When a Gaming GPU Is Totally Fine

A gaming GPU is more than enough if you’re:

- Running small AI chatbots locally

- Generating images or videos at home

- Experimenting with AI tools

- Learning or testing ideas

- Working on a limited budget

A top gaming card like the RTX 5090 is:

- Extremely powerful

- Much more affordable

- Perfect for individual creators and developers

When You Really Need an AI GPU

You’ll want an AI/datacenter GPU if you’re:

- Training giant AI models (LLMs, vision models, etc.)

- Running AI 24/7 for a business

- Working with models and data too large for gaming-grade VRAM

- Scaling across many GPUs at once
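Training needs far more memory than just running a model, which is a big reason it ends up spread across many GPUs. The Python sketch below uses a commonly cited rule of thumb for mixed-precision training with the Adam optimizer: about 16 bytes per parameter for weights, gradients, and optimizer state. It assumes that state is split evenly across GPUs (as sharded training setups do) and ignores activations and overhead, so treat the result as a lower bound, not a sizing guide. The 32 GB and 141 GB capacities are example figures for a top gaming card and an H200.

```python
import math

# Mixed-precision training with Adam is commonly estimated at ~16 bytes per
# parameter: fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + two fp32 Adam moment estimates (4 + 4).
# Activations, data batches, and framework overhead are NOT included.
TRAINING_BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def training_state_gb(params_billion: float) -> float:
    """Approximate GB of weights + gradients + optimizer state."""
    return params_billion * TRAINING_BYTES_PER_PARAM


def min_gpus(params_billion: float, vram_gb: float) -> int:
    """Fewest GPUs whose combined VRAM could hold the training state,
    assuming it is sharded evenly across them."""
    return math.ceil(training_state_gb(params_billion) / vram_gb)


if __name__ == "__main__":
    for params in (8, 70):
        need = training_state_gb(params)
        print(f"{params}B model: ~{need:.0f} GB of training state; "
              f"at least {min_gpus(params, 32)} x 32 GB cards or "
              f"{min_gpus(params, 141)} x 141 GB cards")
```

With these assumptions, a 70B model needs roughly 1,120 GB just for training state: at least 35 cards with 32 GB each, or 8 with 141 GB each. In practice, teams use even more GPUs to finish training in a reasonable time.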

This is why companies like OpenAI, Google, and Meta run thousands of AI GPUs and other AI accelerators in massive data centers: these chips are built for nonstop heavy lifting.

Bottom Line

Both are powerful — but they’re built for different jobs:

- Want beautiful games and some AI experiments? → Get a gaming GPU.
- Building the next big AI platform? → You need datacenter / AI GPUs.

Gaming GPUs are getting better at AI every year, but for the biggest, toughest workloads, AI GPUs still win — by a lot.